Deploying Kubernetes with Cluster API

Helio provides carbon-aware cloud computing to its customers. To lower emissions, we're shifting workloads to data centers worldwide - leveraging idle computing power and optimizing for the best energy mix.

This comes with a few technical challenges, some of which are:

- Accessing and scaling the infrastructure of 100+ clouds in a unified way.

- Making automated scheduling decisions based on capacity, emissions, and workload efficiency.

In this blog post, you'll learn how we solve some of these challenges with Cluster API. But first, a quick overview of Helio Core:

- We use Kubernetes to unify access to our compute at different locations in the world behind a common API.

- Our infrastructure setup consists of control clusters, each with several attached workload clusters. Every workload cluster is an independent Kubernetes cluster.

- We use our multicluster operator to schedule Argo Workflows to the right workload cluster.
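To make the scheduling idea concrete, here is a minimal sketch of a placement decision that filters by free capacity and then prefers the lowest carbon intensity. It is not the logic of our operator: the `WorkloadCluster` type, the `pick_cluster` function, the cluster names, and all numbers are hypothetical and only illustrate the kind of trade-off involved.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class WorkloadCluster:
    # Hypothetical view of a workload cluster; field names are assumptions.
    name: str
    free_cpu_cores: int        # capacity that is currently unused
    grams_co2_per_kwh: float   # carbon intensity of the local energy mix


def pick_cluster(clusters: List[WorkloadCluster], cpu_needed: int) -> Optional[WorkloadCluster]:
    """Return the lowest-emission cluster that can still fit the workload."""
    candidates = [c for c in clusters if c.free_cpu_cores >= cpu_needed]
    if not candidates:
        return None
    return min(candidates, key=lambda c: c.grams_co2_per_kwh)


# Made-up example data.
clusters = [
    WorkloadCluster("cluster-a", free_cpu_cores=64, grams_co2_per_kwh=350.0),
    WorkloadCluster("cluster-b", free_cpu_cores=16, grams_co2_per_kwh=40.0),
    WorkloadCluster("cluster-c", free_cpu_cores=128, grams_co2_per_kwh=120.0),
]

chosen = pick_cluster(clusters, cpu_needed=32)
print(chosen.name if chosen else "no cluster has enough free capacity")
```

A real scheduler would also weigh workload efficiency and would read capacity and emissions from live data rather than constants.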

Up until now, bootstrapping a new cluster in a data center involved a lot of manual work. Each supplier has a different underlying infrastructure. Even if we target hyperscalers, the setup can be quite daunting. Providing Windows support for rendering is especially challenging in the containerized world.

Recently, we decided to partner with two new data centers: Leafcloud and Ventus cloud. Both cloud providers are based on OpenStack. Not being OpenStack experts, we were looking for a new way to attach them to our platform.

Enter Cluster API

Cluster API aims to provide a Kubernetes-native API that simplifies provisioning, upgrading, and operating multiple Kubernetes clusters. It recently reached production readiness, which makes it a perfect fit for Helio's use case.

Cluster API provides the following:

- a unified declarative way to bootstrap and manage Kubernetes clusters

- an abstraction over infrastructure providers such as AWS, Google, Azure, OpenStack, etc.

- a way to use Kubeadm for any infrastructure

Compared to Rancher, Cluster API lets us completely customize the Kubernetes cluster, starting at the bootstrapping phase.

To start using Cluster API, a management cluster running the Cluster API controllers and infrastructure providers needs to be set up beforehand (see Quick Start).

The recommended way to do this is currently using the clusterctl CLI.

Unfortunately, the CLI can't yet generate manifests that can be applied using GitOps, so for our proof of concept we settled on deploying Cluster API itself without ArgoCD.

Creating a new workload cluster

To attach an OpenStack infrastructure provider, we obtain the credentials from the provider in question (Leafcloud in our case). Using OpenStack tooling and the OpenStack Cluster API template, we generate a few Kubernetes custom resources:

- a Cluster defining settings and a reference to the OpenStackCluster for the workload cluster

- an OpenStackCluster containing cluster configuration specific to the infrastructure provider

- a KubeadmControlPlane specifying configuration and the machine template to use for the control plane machines

- an OpenStackMachineTemplate specifying the machine flavor and image to use

- 1-n MachineDeployment for worker nodes referencing OpenStackMachineTemplate

- ... and some more
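The way these resources point at each other can be sketched as plain data. The following is a trimmed illustration, not our actual manifests: the cluster name, flavor, image, and replica counts are placeholders, the API versions are assumptions that depend on the installed Cluster API and OpenStack provider releases, and most required fields are omitted. The KubeadmConfigTemplate is one of the "some more" resources that a MachineDeployment needs for bootstrapping. The small checker at the end only verifies that every reference has a matching resource.

```python
NAME = "example-cluster"  # hypothetical cluster name

# Assumed API versions; check what your management cluster actually serves.
CORE = "cluster.x-k8s.io/v1beta1"
CONTROL_PLANE = "controlplane.cluster.x-k8s.io/v1beta1"
BOOTSTRAP = "bootstrap.cluster.x-k8s.io/v1beta1"
INFRA = "infrastructure.cluster.x-k8s.io/v1alpha4"


def resource(api_version, kind, name, spec):
    """Build a minimal manifest as a dict."""
    return {"apiVersion": api_version, "kind": kind,
            "metadata": {"name": name}, "spec": spec}


def ref(api_version, kind, name):
    """Build an object reference to another resource."""
    return {"apiVersion": api_version, "kind": kind, "name": name}


def machine_template(name):
    # Placeholder flavor and image; the field shape varies by provider version.
    spec = {"template": {"spec": {"flavor": "example-flavor", "image": "example-image"}}}
    return resource(INFRA, "OpenStackMachineTemplate", name, spec)


resources = [
    resource(CORE, "Cluster", NAME, {
        "infrastructureRef": ref(INFRA, "OpenStackCluster", NAME),
        "controlPlaneRef": ref(CONTROL_PLANE, "KubeadmControlPlane", f"{NAME}-control-plane"),
    }),
    resource(INFRA, "OpenStackCluster", NAME, {}),  # provider settings omitted
    resource(CONTROL_PLANE, "KubeadmControlPlane", f"{NAME}-control-plane", {
        "replicas": 3,
        "machineTemplate": {
            "infrastructureRef": ref(INFRA, "OpenStackMachineTemplate", f"{NAME}-control-plane"),
        },
    }),
    machine_template(f"{NAME}-control-plane"),
    machine_template(f"{NAME}-worker"),
    resource(BOOTSTRAP, "KubeadmConfigTemplate", f"{NAME}-worker", {}),
    resource(CORE, "MachineDeployment", f"{NAME}-worker", {
        "clusterName": NAME,
        "replicas": 2,
        "template": {"spec": {
            "clusterName": NAME,
            "bootstrap": {"configRef": ref(BOOTSTRAP, "KubeadmConfigTemplate", f"{NAME}-worker")},
            "infrastructureRef": ref(INFRA, "OpenStackMachineTemplate", f"{NAME}-worker"),
        }},
    }),
]


def find_refs(node):
    """Yield (kind, name) for every '...Ref' object nested in a manifest."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key.endswith("Ref") and isinstance(value, dict):
                yield value["kind"], value["name"]
            else:
                yield from find_refs(value)
    elif isinstance(node, list):
        for item in node:
            yield from find_refs(item)


known = {(r["kind"], r["metadata"]["name"]) for r in resources}
dangling = sorted({r for res in resources for r in find_refs(res)} - known)
print("dangling references:", dangling)
```

In practice the manifests come out of the provider's template as YAML; the sketch only shows which resource refers to which.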

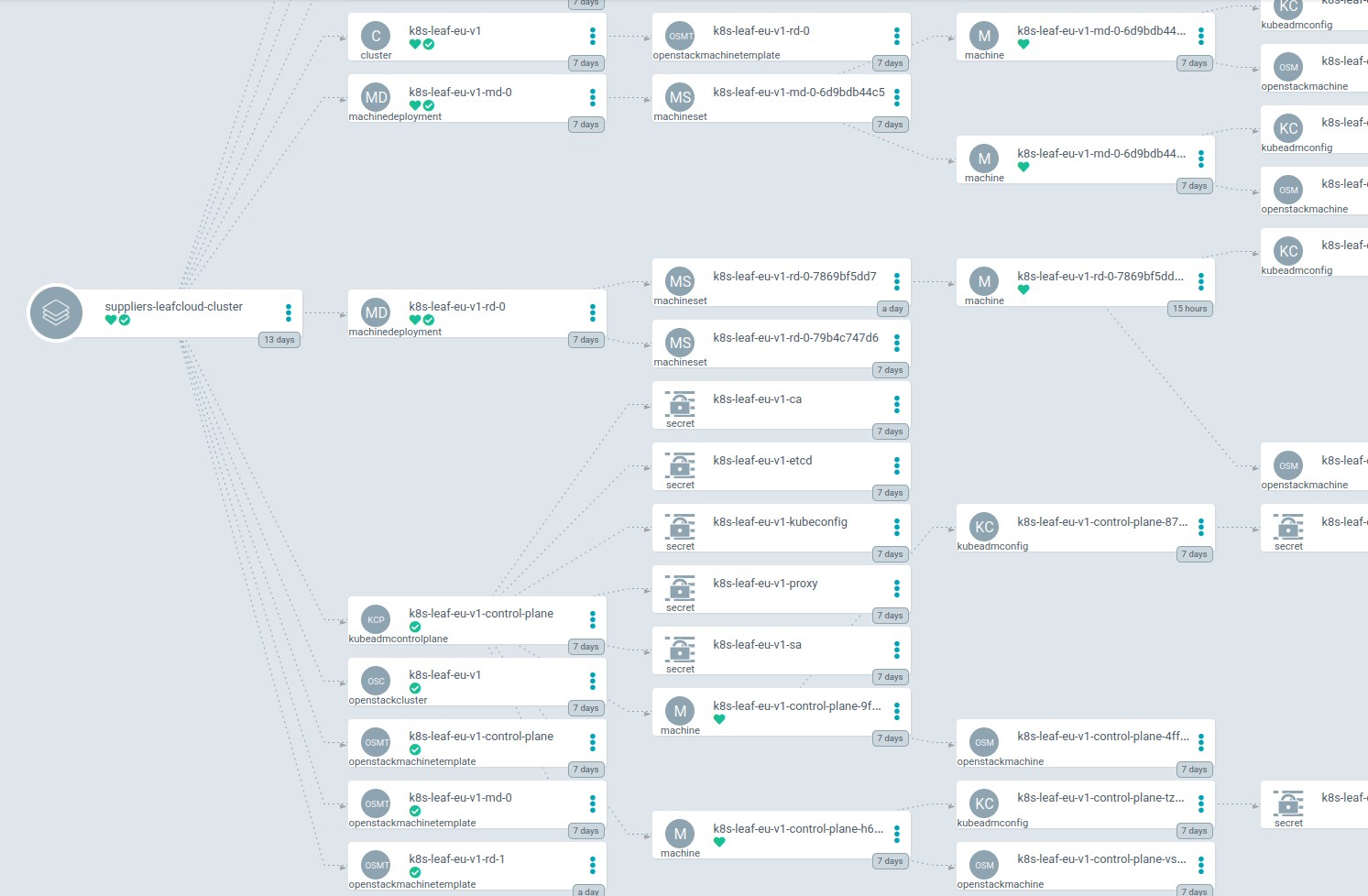

For illustration purposes have a look at how ArgoCD displays our Leafcloud cluster:

Beautiful, isn't it? A complete cluster represented using Kubernetes custom resources.

After generating these resources (in YAML representation), we apply them using ArgoCD, and a new cluster appears within a couple of minutes. Of course, this is a bare-bones cluster; we need a few things on top of it to make it work:

- Calico (v3.20.2) for networking (CNI)

- Cinder (v1.4.9) for storage (CSI)

- OpenStack Cloud Controller (out of tree, v1.1.2) to manage services, volumes, ... specific to OpenStack

- Cluster autoscaler (v9.10.8) to scale worker nodes up and down based on workload

- Metrics, Logging, Argo Workflows, custom operators, ...

For our first OpenStack cluster, it took us about a week from the start to the first workload running on Leafcloud. The second cluster was created and running within a day.

I can't believe it's that simple. Amazing how well that works.

It astonished us how well it works. Using Cluster API, we're able to bootstrap new clusters in no time. While we have only tested OpenStack-based infrastructure providers so far, we're positive that other providers will work similarly. We have already started planning to move our other clusters to Cluster API too.

Demo

The following screen recording shows how a workload gets scheduled on Leafcloud and how it affects our view of the infrastructure.

Helio Render Client + Helio Core + Leafcloud

Looking back

Cluster API is working well for us, and if you have similar needs, you should try it out.

Of course, not everything is shiny. There is still a lot to do before everything works. Understanding Cluster API and OpenStack from scratch took us quite a while, too. The documentation could sometimes be a bit better (OpenStack's documentation in particular is hard to understand as a newcomer).

The main remaining issue we have open is the cluster autoscaler's ability to scale to/from zero for Cluster API-based clusters.

For the cluster autoscaler to be able to scale node groups from/to zero, the implementation needs to be able to retain information about the machines' hardware capabilities (CPU, memory, GPU, ...) and possible taints & labels (for nodeSelector). This is currently not implemented, but a proposal exists, and there should be a first PR ready within a few weeks.
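Why an empty node group is a problem can be shown with a small model. This is a simplified sketch, not the cluster autoscaler's code: `NodeGroup`, `declared_capacity`, and `can_scale_up_for` are hypothetical names, and taints and labels are left out. The point is that with at least one running node the capacity can be copied from it, while at zero nodes it has to come from somewhere else.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class NodeGroup:
    # Hypothetical model of a node group as seen by an autoscaler.
    name: str
    running_nodes: List[Dict[str, int]]                  # capacity of each live node
    declared_capacity: Optional[Dict[str, int]] = None   # assumed up-front hint

    def node_template(self) -> Optional[Dict[str, int]]:
        """Capacity a new node in this group would have, if it can be known."""
        if self.running_nodes:
            return self.running_nodes[0]   # copy what an existing node reports
        return self.declared_capacity      # at zero nodes, only a hint helps


def can_scale_up_for(group: NodeGroup, pod_requests: Dict[str, int]) -> bool:
    """Would adding a node to this group let the pending pod fit?"""
    template = group.node_template()
    if template is None:
        return False  # nothing is known about the machines, so no decision
    return all(template.get(res, 0) >= amount for res, amount in pod_requests.items())


gpu_pod = {"cpu": 4, "gpu": 1}  # made-up pending pod

empty_group = NodeGroup("gpu-workers", running_nodes=[])
hinted_group = NodeGroup("gpu-workers", running_nodes=[],
                         declared_capacity={"cpu": 8, "gpu": 1})
live_group = NodeGroup("gpu-workers", running_nodes=[{"cpu": 8, "gpu": 1}])

print(can_scale_up_for(empty_group, gpu_pod))   # False
print(can_scale_up_for(hinted_group, gpu_pod))  # True
print(can_scale_up_for(live_group, gpu_pod))    # True
```

The same reasoning applies to taints and labels: without a node to inspect, the autoscaler cannot tell whether a pod's nodeSelector would match.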

We can't wait to test this because scaling to/from zero is very important for us to be able to optimize the resource footprint as much as possible.

Our setup is not fully automated yet. It still takes quite a few manual steps to add a new cluster. Yet with Cluster API, ArgoCD, and related tooling we have the tools to build a completely automated solution. We could even provide a way for suppliers to onboard themselves.

We can't wait to continue with this infrastructure setup. Get in contact or book a call with the founders if you would like to take part.